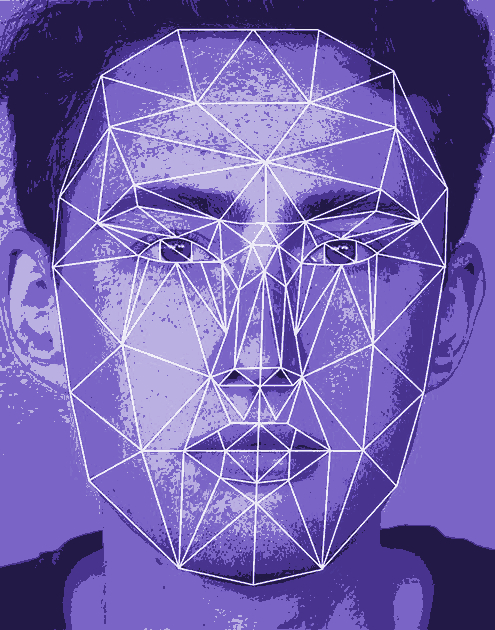

'Scope creep' surveillance warning

Reports say the Queensland Police face-matching software used during the Commonwealth Games was so rushed it was ineffective.

Reports obtained by the ABC under Right to Information laws reveal the QPS facial recognition system failed to identify any targets flagged as a high priority, and was later repurposed for general policing.

“Difficulties were experienced in data ingestion into one of the systems with the testing and availability not available until the week Operation Sentinel [the Games security operation] commenced,” says the evaluation report conducted by the Queensland Police Service (QPS) after the 2018 event.

“The inability of not having the legislation passed, both Commonwealth and state, in time for the Commonwealth Games reduced the database from an anticipated 46 million images to approximately eight million.”

After a few days, the technology was opened up to general policing, where it returned just five identities from 268 requests.

“Given the limited requests from within the Games, opportunity to conduct inquiries for the general policing environment was provided to enable better testing of the processes and capabilities,” the report stated.

Michael Cope from the Queensland Council for Civil Liberties warned of “scope creep”.

“It reminds people that all this legislation is always dressed up as trying to get bad people who are coming to murder us. It is not at all,” he said.

“This just demonstrates that really the main use of this thing is not going to be to find people who might be potentially coming to cause mayhem and to kill people, but it's going to be to catch people who are committing ordinary mundane offences.”

QPS told reporters that there “were no problems experienced” with the mass surveillance software.

But police then blocked numerous requests for the documents, until the Office of the Information Commissioner ruled that releasing the report was in the public interest.

Queensland privacy commissioner Philip Green has concerns about future use of the technology.

“For law enforcement in the most serious crime prevention, in terrorism for instance, no-one's going to argue with it,” he said.

“But we do need, as a society, to look at impacts of the technology on a wider group of people to make sure that they're not being disadvantaged unfairly and that the technology use is proportionate and reasonable.

“Countries such as China have been reported for using this sort of technology for jay-walking offences, and even rationing toilet paper in public toilets.

“Minority groups have obviously been oppressed in countries where protections and human rights protections aren't in place — that's my broader concern.”
